The False Positive Problem in Vulnerability Management

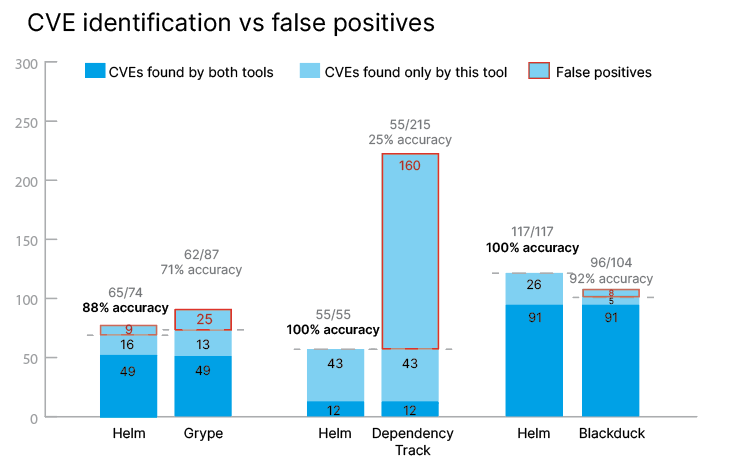

Medical device security teams lose hours verifying alerts from vulnerability tools that prioritize quantity over quality. When a vulnerability management tool flags 215 CVEs but only 55 are valid, that is not better security; it is 160 false alarms that each require manual verification.

The impact is significant: a security engineer at $150/hour spending 17 hours per assessment on false positive verification costs $2,550 in wasted labor per assessment. For manufacturers conducting 200 assessments annually, that comes to $510,000 in verification costs alone.
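The arithmetic behind these figures is easy to reproduce. The sketch below is illustrative only: the function and constant names are ours, and the inputs are the hourly rate, hours per assessment, and assessment count quoted above.

```python
# Inputs taken from the paragraph above; names are illustrative.
HOURLY_RATE_USD = 150
HOURS_PER_ASSESSMENT = 17
ASSESSMENTS_PER_YEAR = 200


def verification_cost(hourly_rate: int, hours: int, assessments: int = 1) -> int:
    """Labor cost of verifying false positives across a number of assessments."""
    return hourly_rate * hours * assessments


per_assessment = verification_cost(HOURLY_RATE_USD, HOURS_PER_ASSESSMENT)
annual = verification_cost(HOURLY_RATE_USD, HOURS_PER_ASSESSMENT, ASSESSMENTS_PER_YEAR)

print(f"Per assessment: ${per_assessment:,}")  # Per assessment: $2,550
print(f"Per year:       ${annual:,}")          # Per year:       $510,000
```

Swapping in your own rate, hours, and assessment volume gives a quick estimate for your team.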

The root cause? Most vulnerability tools use loose component matching that generates overwhelming numbers of false positives. They match components incorrectly, ignore platform requirements, and flag CVEs for the wrong software versions, forcing security teams to spend more time verifying their tools than securing their devices.

Rigorous Testing Methodology

We conducted three separate comparative analyses to evaluate how Helm performs against leading vulnerability management tools: Grype, Dependency Track, and Blackduck. Each test used real Software Bills of Materials (SBOMs) from Linux-based medical devices.

Our validation process was thorough. Every CVE identified by any tool underwent rigorous verification to ensure it met the following criteria:

- Vulnerability pertained to a component actually listed in the SBOM

- Vulnerability affected the correct version of that dependency, and

- Vulnerability was relevant to the device's platform.

This eliminated any ambiguity about what constituted a "valid" vulnerability versus a false positive.
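The three criteria can be read as a single predicate over a finding. The following is a minimal sketch under simplifying assumptions, not a description of how any of the tested tools work: the `Component` and `Finding` types, the `is_valid` function, the component names, and the placeholder CVE IDs are all hypothetical, and affected versions are modeled as an explicit set rather than a version range.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One SBOM entry (hypothetical, simplified)."""
    name: str
    version: str


@dataclass(frozen=True)
class Finding:
    """One CVE reported by a tool (hypothetical, simplified)."""
    cve_id: str
    component_name: str
    affected_versions: frozenset  # explicit versions; real advisories use ranges
    platforms: frozenset


def is_valid(finding: Finding, sbom: list, device_platform: str) -> bool:
    """Apply the three validation criteria in order."""
    # 1. The component must actually be listed in the SBOM.
    component = next((c for c in sbom if c.name == finding.component_name), None)
    if component is None:
        return False
    # 2. The CVE must affect the version that is in the SBOM.
    if component.version not in finding.affected_versions:
        return False
    # 3. The CVE must be relevant to the device's platform.
    return device_platform in finding.platforms


sbom = [Component("libexample", "2.4.1")]
findings = [
    Finding("CVE-0000-0001", "libexample", frozenset({"2.4.0", "2.4.1"}), frozenset({"linux"})),
    Finding("CVE-0000-0002", "libexample", frozenset({"1.0.0"}), frozenset({"linux"})),
    Finding("CVE-0000-0003", "libexample", frozenset({"2.4.1"}), frozenset({"windows"})),
    Finding("CVE-0000-0004", "otherlib", frozenset({"2.4.1"}), frozenset({"linux"})),
]

valid_ids = [f.cve_id for f in findings if is_valid(f, sbom, "linux")]
print(valid_ids)  # ['CVE-0000-0001']
```

Only the first finding passes all three checks; the others fail on version, platform, and component presence respectively.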

We evaluated three critical metrics:

- CVE identification accuracy (how precisely tools match vulnerabilities to components),

- Component matching precision (whether tools correctly identify software components), and

- False positive rates (percentage of flagged vulnerabilities that were irrelevant).
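As a worked example of the false positive rate, the sketch below applies the definition to the 215 flagged and 55 valid CVEs cited earlier. The function name is ours, and it assumes every valid CVE is among the flagged ones.

```python
def false_positive_rate(flagged: int, valid: int) -> float:
    """Share of flagged CVEs that did not survive validation."""
    if flagged <= 0:
        raise ValueError("flagged must be positive")
    if not 0 <= valid <= flagged:
        raise ValueError("valid must be between 0 and flagged")
    return (flagged - valid) / flagged


flagged, valid = 215, 55
false_alarms = flagged - valid
rate = false_positive_rate(flagged, valid)

print(f"{false_alarms} false alarms, {rate:.0%} false positive rate")
# 160 false alarms, 74% false positive rate
```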

Results: Accuracy Over Quantity

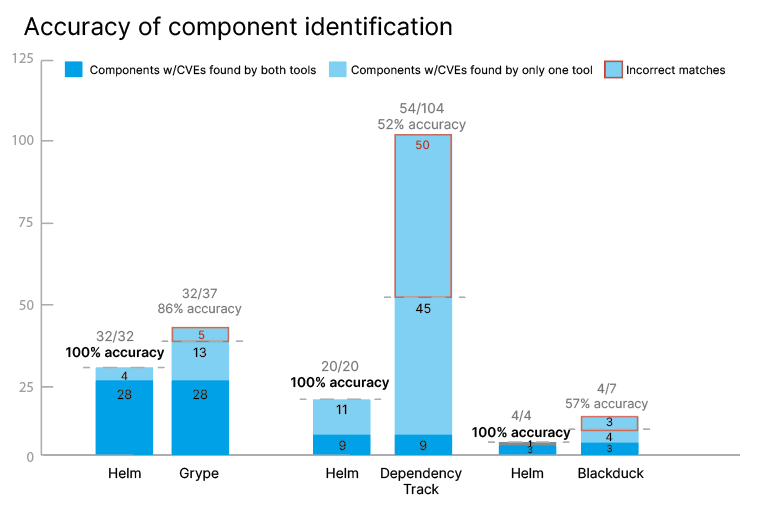

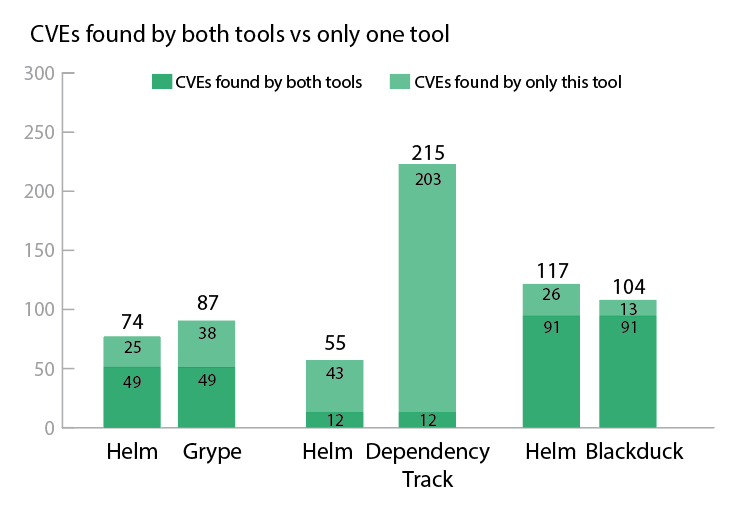

Helm consistently outperformed competitors across all three tests, achieving 96% average accuracy compared to 63-92% for competing tools. The most dramatic difference: Dependency Track flagged 215 CVEs, of which only 55 were valid. That is a 74% false positive rate, costing 17+ hours of verification per assessment. Helm identified the same 55 valid CVEs with zero false positives from component misidentification.

Helm vs Grype

In our comparison with Grype, Helm achieved 88% accuracy versus Grype's 71%. While Grype identified 87 total CVEs, 44% of them were false positives requiring manual verification. Helm found 74 CVEs with zero false positives from component misidentification, so every alert was actionable.

Helm vs Dependency Track

The most dramatic difference emerged in testing against Dependency Track. Both tools identified 55 valid CVEs, but Dependency Track flagged 215 total vulnerabilities, so 74% of its alerts (160 of 215) were false positives. The tool's overly permissive fuzzy matching misidentified 50 components, generating cascading false alarms. For example, it matched the npm package "@types/connect" to Adobe Connect software, creating 31 irrelevant CVE alerts. Helm's multi-datapoint validation (verifying component name, version, supplier, platform, and ecosystem) eliminated such errors entirely.

Helm vs Blackduck

Testing against Blackduck showed Helm's perfect component matching accuracy: 117 out of 117 components correctly identified (100%), compared to Blackduck's 96 out of 104 (92% accuracy). While Blackduck performed well overall, even a roughly 8% error rate (8 of 104 components, about 1 in 13) means alerts tied to misidentified components still require manual investigation.

Our Results Speak For Themselves

The efficiency impact is measurable. Security teams using Helm spend zero time verifying false positives, compared to 17.9 hours per assessment with tools like Dependency Track. Helm's automated FDA SBOM generation saves an additional 1-2 weeks per regulatory submission by producing documentation that takes manual effort to assemble with competing tools.

Medcrypt customer Merlin Nunez from Ypsomed confirmed these findings for Dependency Track: "Helm is better organized and the reports it produces are friendlier to humans than Dependency Track. Dependency Track seems to be quite a bit more prone to false positives than Helm."

Beyond accuracy, Helm provides purpose-built features for medical device manufacturers: automated compliance reporting (SBOM, VEX, VDR generation), FDA submission templates created by former FDA reviewers, and intelligent auto-rescoring that adjusts vulnerability severity as threat intelligence evolves. These capabilities, backed by Medcrypt's 100% FDA submission success rate across 140+ medical device manufacturers, demonstrate that accuracy and compliance efficiency work together.

Helm is better organized and the reports it produces are friendlier to humans than Dependency Track. Also, the Alias feature in Helm is not present in Dependency Track which is a big point for us. Dependency Track seems to be quite a bit more prone to false positives than Helm.

— Merlin Nunez, Engineer, Ypsomed